➡️ Video Datasets

Providing video-based datasets

Ta-da is designed to enable the creation of high-quality video datasets, which are crucial for a wide range of AI applications.

Our platform supports both the collection and the annotation of video data, which together form the foundation for training machine learning models in fields such as computer vision, autonomous driving, and video analytics.

Key features of our video dataset creation include:

Bounding Boxes: Precise annotation of objects within video frames. This feature is essential for training AI models to detect and track objects accurately across video sequences.
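To make the idea concrete, here is a minimal sketch of what a single bounding-box annotation might look like. The `BoxAnnotation` schema and the `iou` helper are hypothetical illustrations, not Ta-da's actual export format. The sketch assumes pixel coordinates with the top-left corner at `(x_min, y_min)`. Intersection-over-union (IoU) is a standard way to compare a predicted box against an annotated one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoxAnnotation:
    """One bounding box on one video frame (hypothetical schema).

    Coordinates are in pixels: (x_min, y_min) is the top-left corner,
    (x_max, y_max) is the bottom-right corner.
    """
    frame_index: int
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def __post_init__(self) -> None:
        # Reject negative frame indices and degenerate or inverted boxes.
        if self.frame_index < 0:
            raise ValueError("frame_index must be non-negative")
        if self.x_max <= self.x_min or self.y_max <= self.y_min:
            raise ValueError("box must have positive width and height")

    def area(self) -> float:
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)


def iou(a: BoxAnnotation, b: BoxAnnotation) -> float:
    """Intersection-over-union of two boxes, a value in [0, 1]."""
    # Width and height of the overlapping rectangle (zero if disjoint).
    ix = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    iy = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    inter = ix * iy
    # Union is always positive because __post_init__ forbids empty boxes.
    union = a.area() + b.area() - inter
    return inter / union
```

For example, two 10×10 boxes offset by 5 pixels in each direction overlap in a 5×5 square, so their IoU is 25 / 175 = 1/7.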

Action Recognition: Detailed labeling of specific actions or activities within video segments. This supports the development of AI models for behavior analysis, activity recognition, and surveillance.
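Action labels are usually attached to time intervals rather than to individual frames. The sketch below is one way to map such intervals onto frames. `ActionSegment`, `frame_range`, and `frame_labels` are hypothetical names, not part of any Ta-da API. The sketch assumes half-open intervals `[start_s, end_s)` in seconds and a constant frame rate, where frame `i` is shown at time `i / fps`.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionSegment:
    """A labeled time interval in a video (hypothetical schema)."""
    label: str
    start_s: float  # inclusive start time, in seconds
    end_s: float    # exclusive end time, in seconds


def frame_range(seg: ActionSegment, fps: float) -> range:
    """Indices of frames whose timestamp i / fps lies in [start_s, end_s)."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    if seg.end_s <= seg.start_s:
        raise ValueError("segment must have positive duration")
    # start_s <= i / fps < end_s  <=>  ceil(start_s * fps) <= i < ceil(end_s * fps)
    return range(math.ceil(seg.start_s * fps), math.ceil(seg.end_s * fps))


def frame_labels(segments: list[ActionSegment], num_frames: int, fps: float) -> list[list[str]]:
    """Per-frame list of action labels; overlapping actions are all kept."""
    labels: list[list[str]] = [[] for _ in range(num_frames)]
    for seg in segments:
        for i in frame_range(seg, fps):
            if 0 <= i < num_frames:  # ignore parts of a segment past the clip end
                labels[i].append(seg.label)
    return labels
```

Keeping a list per frame, rather than a single label, allows overlapping activities such as a person walking and waving at the same time.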

Scene Segmentation: Advanced division of video frames into distinct segments, enabling detailed analysis and classification of the individual components within a scene. This is crucial for interpreting complex environments and improving scene understanding models.

Object Tracking: Robust tracking of object movements across multiple frames. This is essential for developing effective tracking algorithms for applications such as security, sports analysis, and autonomous navigation.
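Tracking annotations typically give the same object a persistent ID across frames. The sketch below shows how such annotations could be grouped into per-object trajectories and checked for two common quality issues: a duplicate box for one track on a single frame, and frames where a tracked object has no box. `TrackedBox`, `build_tracks`, and `missing_frames` are hypothetical names chosen for illustration and are not Ta-da's data model.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackedBox:
    """A bounding box on one frame, tagged with a persistent track ID (hypothetical schema)."""
    track_id: int
    frame_index: int
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def build_tracks(boxes: list[TrackedBox]) -> dict[int, list[TrackedBox]]:
    """Group boxes by track_id and sort each track by frame index.

    Raises ValueError if a track has more than one box on the same frame.
    """
    tracks: dict[int, list[TrackedBox]] = defaultdict(list)
    seen: set[tuple[int, int]] = set()
    for box in boxes:
        key = (box.track_id, box.frame_index)
        if key in seen:
            raise ValueError(
                f"track {box.track_id} has two boxes on frame {box.frame_index}"
            )
        seen.add(key)
        tracks[box.track_id].append(box)
    for track in tracks.values():
        track.sort(key=lambda b: b.frame_index)
    return dict(tracks)


def missing_frames(track: list[TrackedBox]) -> list[int]:
    """Frames between a track's first and last appearance that have no box."""
    if not track:
        return []
    present = {b.frame_index for b in track}
    return [f for f in range(min(present), max(present) + 1) if f not in present]
```

Gaps are not necessarily errors, since an object may be briefly occluded. A check like `missing_frames` is best used to flag tracks for human review rather than to reject them automatically.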

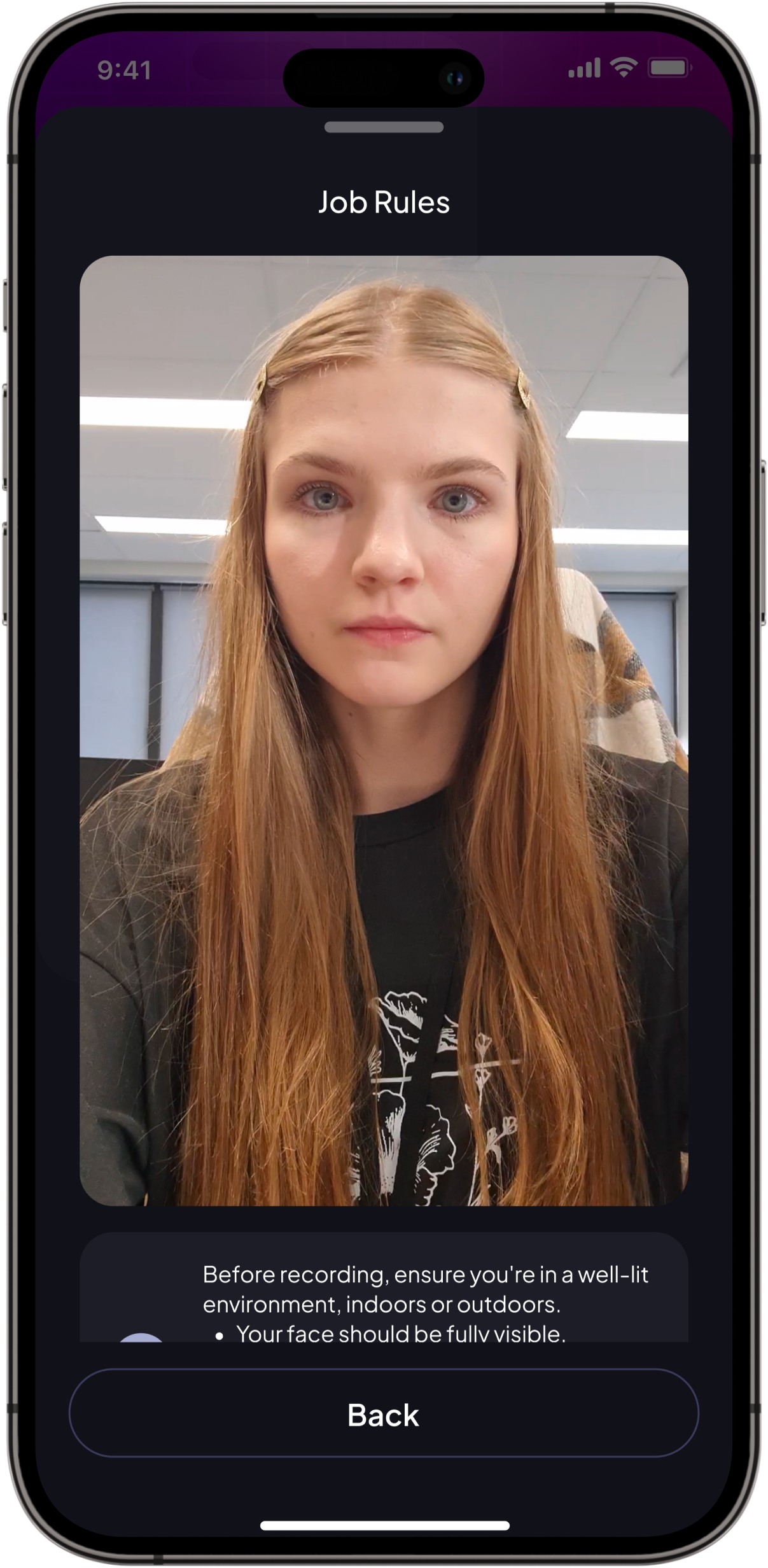

Biometric Analysis (KYC): Integration of biometric data for Know Your Customer (KYC) processes. This includes facial recognition and other biometric verification methods, supporting accurate identification and authentication of individuals within video datasets.

We have already created a video dataset for Identt consisting of videos designed specifically to train KYC algorithms. This dataset improves the accuracy and reliability of biometric verification systems and demonstrates our ability to deliver specialized datasets for complex AI applications.